1. Role-based access control (RBAC)

Context:

You have been asked to create a new ClusterRole for a deployment pipeline and bind it to a specific ServiceAccount, scoped to a specific namespace.

Task:

Create a new ClusterRole named deployment-clusterrole that only allows creating the following resource types:

Deployment

StatefulSet

DaemonSet

Create a new ServiceAccount named cicd-token in the existing namespace app-team1.

Bind the new ClusterRole deployment-clusterrole to the new ServiceAccount cicd-token, limited to the namespace app-team1.
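A minimal sketch of the three steps with imperative kubectl commands. The binding name `cicd-token-binding` is hypothetical. The key design choice is using a RoleBinding instead of a ClusterRoleBinding, because a RoleBinding grants the ClusterRole's permissions only inside app-team1:

```shell
# ClusterRole that permits only the "create" verb on the three workload types
kubectl create clusterrole deployment-clusterrole \
  --verb=create \
  --resource=deployments,statefulsets,daemonsets

# ServiceAccount in the existing namespace
kubectl -n app-team1 create serviceaccount cicd-token

# RoleBinding (namespace-scoped) so the permissions apply only in app-team1
# (binding name is hypothetical)
kubectl -n app-team1 create rolebinding cicd-token-binding \
  --clusterrole=deployment-clusterrole \
  --serviceaccount=app-team1:cicd-token

# Check the result by impersonating the ServiceAccount
kubectl auth can-i create deployments -n app-team1 \
  --as=system:serviceaccount:app-team1:cicd-token
```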


2. Node maintenance: mark a node unavailable (search the official docs for "Safely Drain a Node")

Task:

Mark the node ek8s-node-1 as unavailable, then reschedule all pods running on it.
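A possible command sequence, following the "Safely Drain a Node" page. `kubectl drain` cordons the node (marks it unschedulable) and then evicts its pods so their controllers recreate them elsewhere. `--delete-emptydir-data` (kubectl 1.20+) and `--force` are only needed when pods use emptyDir volumes or are not managed by a controller. Switch to the context named in the task first, since that context is not recorded in these notes:

```shell
# Cordon + evict: DaemonSet pods are skipped because they cannot move
kubectl drain ek8s-node-1 --ignore-daemonsets --delete-emptydir-data --force

# The node should now show Ready,SchedulingDisabled
kubectl get nodes

# Only DaemonSet-managed pods should still be listed on the node
kubectl get pods -A -o wide --field-selector spec.nodeName=ek8s-node-1
```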


3. Kubernetes version upgrade (search the official docs for "kubeadm upgrade"; the exam upgrades from 1.23 to 1.24)
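A sketch of a control-plane upgrade with kubeadm on a Debian/Ubuntu node. Several details are assumptions: the node name `master1` comes from the shell prompt in these notes, `1.24.0` is a placeholder patch version, and the `-00` package suffix assumes the legacy apt repository. Use `--etcd-upgrade=false` only if the task says etcd must not be upgraded:

```shell
# From a machine with kubectl access: move workloads off the control-plane node
kubectl cordon master1
kubectl drain master1 --ignore-daemonsets

# On master1, as root (if the packages are held: apt-mark unhold kubeadm kubelet kubectl)
apt-get update
apt-get install -y kubeadm=1.24.0-00      # placeholder version
kubeadm version
kubeadm upgrade plan
kubeadm upgrade apply v1.24.0 --etcd-upgrade=false

# Upgrade kubelet and kubectl to match, then restart kubelet
apt-get install -y kubelet=1.24.0-00 kubectl=1.24.0-00
systemctl daemon-reload
systemctl restart kubelet

# Back on the kubectl machine: allow scheduling again and check the version
kubectl uncordon master1
kubectl get nodes
```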


4. Etcd backup and restore

Before starting this task, make sure you are working as student@node-1.

```shell
export ETCDCTL_API=3
```
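A sketch of the backup and restore commands with etcdctl v3. Every path and the endpoint below are hypothetical placeholders: the real certificate files and snapshot locations come from the task text. In etcd 3.5, `snapshot status` and `snapshot restore` still work in etcdctl but are deprecated in favor of `etcdutl`:

```shell
export ETCDCTL_API=3

# Hypothetical values: replace with the endpoint and files named in the task
ENDPOINT=https://127.0.0.1:2379
CACERT=/path/to/ca.crt
CERT=/path/to/etcd-client.crt
KEY=/path/to/etcd-client.key

# Backup: take a snapshot of the running etcd over TLS
etcdctl --endpoints="$ENDPOINT" --cacert="$CACERT" --cert="$CERT" --key="$KEY" \
  snapshot save /path/to/etcd-snapshot.db

# Optional: inspect the snapshot (hash, revision, key count, size)
etcdctl --write-out=table snapshot status /path/to/etcd-snapshot.db

# Restore: unpack an earlier snapshot into a fresh data directory
etcdctl snapshot restore /path/to/previous-snapshot.db --data-dir=/var/lib/etcd-restore
```

The restore only writes files. etcd must then be started with that data directory, for example by pointing the data volume in its static Pod manifest at it, and how that is done depends on the cluster.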

5. NetworkPolicy


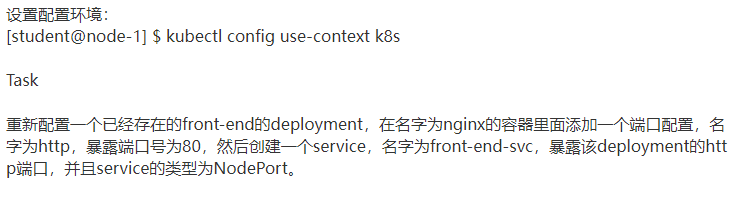
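A sketch of a namespace-scoped ingress policy. All names are hypothetical: the policy `allow-port-from-namespace`, the protected namespace `my-app`, the source namespace `echo` with label `project=echo`, and port 9000. It selects every pod in `my-app` and allows inbound TCP 9000 only from pods in namespaces that carry that label. Because the policy selects those pods, all other inbound traffic to them is denied:

```shell
# Make sure the source namespace has the label the policy matches on
kubectl label namespace echo project=echo --overwrite

kubectl apply -f - <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-port-from-namespace
  namespace: my-app
spec:
  podSelector: {}            # applies to all pods in my-app
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          project: echo
    ports:
    - protocol: TCP
      port: 9000
EOF

kubectl describe networkpolicy allow-port-from-namespace -n my-app
```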

6. Layer-4 load balancing: Service

The Service used here is of type `NodePort`.
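A minimal sketch that assumes the task exposes an existing Deployment. The names `front-end` and `front-end-svc` and port 80 are hypothetical. A NodePort Service opens a port (30000-32767 by default) on every node and forwards it to the Service's pods. If the task also requires a named container port, add it to the Pod template with `kubectl edit deployment` first:

```shell
# Create a NodePort Service in front of the Deployment's pods
kubectl expose deployment front-end --name=front-end-svc \
  --port=80 --target-port=80 --protocol=TCP --type=NodePort

# PORT(S) shows the allocated node port, e.g. 80:3xxxx/TCP
kubectl get svc front-end-svc

# Endpoints should list the pod IPs backing the Service
kubectl get endpoints front-end-svc
```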

7. Layer-7 load balancing: Ingress

```shell
kubectl config use-context k8s
```
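A sketch of a `networking.k8s.io/v1` Ingress that routes one HTTP path to a backend Service. It assumes an IngressClass named `nginx` exists. The Ingress name, namespace, path, Service name and port are all hypothetical. Apply it after switching to the task's context:

```shell
kubectl apply -f - <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress           # hypothetical
  namespace: my-ns           # hypothetical
spec:
  ingressClassName: nginx    # assumes this IngressClass exists
  rules:
  - http:
      paths:
      - path: /hi            # hypothetical path
        pathType: Prefix
        backend:
          service:
            name: my-svc     # hypothetical backend Service
            port:
              number: 80
EOF

# ADDRESS is filled in once the ingress controller picks up the resource
kubectl get ingress -n my-ns
```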

8. Scaling pods up and down with a Deployment

```shell
kubectl scale --replicas=3 deployment/loadbalancer
```
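To confirm the scale operation finished, wait for the rollout and compare READY with the requested replica count:

```shell
# Blocks until all replicas of the Deployment are available
kubectl rollout status deployment/loadbalancer

# READY should read 3/3
kubectl get deployment loadbalancer
```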

9. Scheduling a Pod onto a specific node
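A sketch using `nodeSelector`, the simplest way to pin a Pod to nodes that carry a given label. The label `disk=ssd` and the Pod name `nginx-ssd` are hypothetical. Check that some node actually has the label, because otherwise the Pod stays Pending:

```shell
# Which nodes carry the label?
kubectl get nodes -l disk=ssd

kubectl apply -f - <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx-ssd            # hypothetical
spec:
  containers:
  - name: nginx
    image: nginx
  nodeSelector:
    disk: ssd                # hypothetical label
EOF

# The NODE column shows where the Pod landed
kubectl get pod nginx-ssd -o wide
```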


10. Checking the number of Ready nodes
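One way to get the count, assuming the task also asks you to exclude nodes tainted `NoSchedule` (a common variant that is not stated in these notes). The first command counts nodes whose STATUS starts with `Ready`. That includes `Ready,SchedulingDisabled` but not `NotReady`. The second command lists every node's taint effects so you can subtract the Ready nodes that show `NoSchedule`:

```shell
# STATUS is the second column of `kubectl get nodes`
kubectl get nodes --no-headers | awk '$2 ~ /^Ready/' | wc -l

# All taint effects per node (<none> when untainted)
kubectl get nodes -o custom-columns=NAME:.metadata.name,EFFECTS:.spec.taints[*].effect
```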


11. Running multiple containers in one Pod
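A sketch of one Pod that runs several containers. They share the Pod's network namespace and lifecycle. The Pod name `multi-app` and the three images are hypothetical examples:

```shell
kubectl apply -f - <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: multi-app            # hypothetical
spec:
  containers:
  - name: nginx
    image: nginx
  - name: redis
    image: redis
  - name: memcached
    image: memcached
EOF

# READY should reach 3/3 once all containers are running
kubectl get pod multi-app
```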


12. PersistentVolume
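A sketch of a hostPath PersistentVolume. PersistentVolumes are cluster-scoped, so no namespace is set. The name `app-config`, the size, the access mode and the host path are hypothetical values to be replaced with the task's:

```shell
kubectl apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: app-config           # hypothetical
spec:
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteMany
  hostPath:
    path: /srv/app-config    # hypothetical directory on the node
EOF

# STATUS should be Available until a claim binds it
kubectl get pv app-config
```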


13. PersistentVolumeClaim

The claim is mounted into the Pod at `/usr/share/nginx/html`.
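A sketch of a PVC plus an nginx Pod that mounts it at `/usr/share/nginx/html`. The claim name `pv-volume`, the StorageClass `csi-hostpath-sc`, the sizes and the Pod name `web-server` are hypothetical. Resizing a bound claim works only if its StorageClass sets `allowVolumeExpansion: true`:

```shell
kubectl apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-volume                  # hypothetical
spec:
  storageClassName: csi-hostpath-sc  # hypothetical StorageClass
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Mi
---
apiVersion: v1
kind: Pod
metadata:
  name: web-server                 # hypothetical
spec:
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: data
      mountPath: /usr/share/nginx/html
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: pv-volume
EOF

# Grow the claim later (requires allowVolumeExpansion on the StorageClass)
kubectl patch pvc pv-volume -p '{"spec":{"resources":{"requests":{"storage":"70Mi"}}}}'
```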

14. Viewing Pod logs

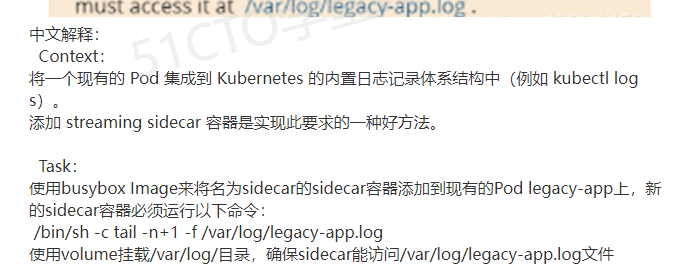
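A typical pattern for extracting matching log lines into a file. The Pod name, search string and output path are hypothetical. Add `-c <container>` for multi-container Pods, or `--previous` to read the logs of the last crashed container instance:

```shell
# Keep only the lines containing the (hypothetical) error string
kubectl logs my-pod | grep 'error' > /tmp/my-pod-errors.log
```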

15. Sidecar agent

```shell
# Main container: append one timestamped line per second to the log file
i=0; while true; do echo "$(date) INFO $i" >> /var/log/legacy-app.log; i=$((i+1)); sleep 1; done

# Sidecar container: stream the same file to stdout so `kubectl logs` can read it
tail -n+1 -F /var/log/legacy-app.log
```

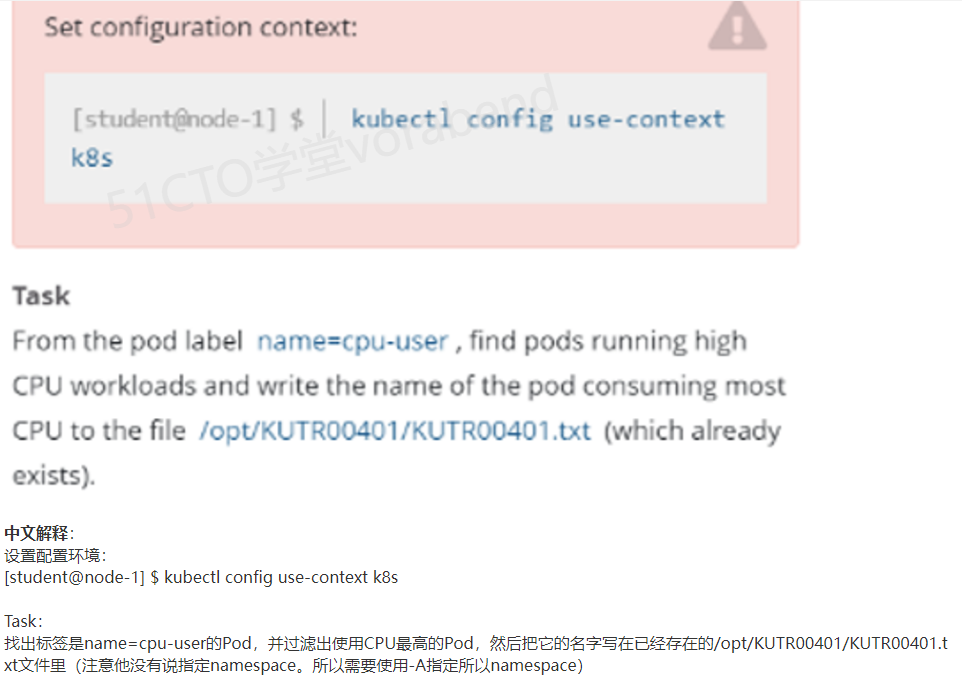
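A sketch of the complete Pod. The main container and the sidecar share an `emptyDir` volume mounted at `/var/log`, so the sidecar can tail the file the main container writes. The Pod name `legacy-app`, the container names and the `busybox` image are assumptions. To add the sidecar to an existing Pod, export it with `kubectl get pod legacy-app -o yaml`, add the container and volume, and recreate it with `kubectl replace --force -f`. The heredoc delimiter is quoted so the outer shell does not expand `$(date)` or `$i`:

```shell
kubectl apply -f - <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: legacy-app           # assumed name
spec:
  containers:
  - name: app                # assumed name
    image: busybox
    args:
    - /bin/sh
    - -c
    - >
      i=0;
      while true;
      do
        echo "$(date) INFO $i" >> /var/log/legacy-app.log;
        i=$((i+1));
        sleep 1;
      done
    volumeMounts:
    - name: logs
      mountPath: /var/log
  - name: sidecar            # assumed name
    image: busybox
    args: [/bin/sh, -c, 'tail -n+1 -F /var/log/legacy-app.log']
    volumeMounts:
    - name: logs
      mountPath: /var/log
  volumes:
  - name: logs
    emptyDir: {}
EOF

# The sidecar's stdout now carries the application log
kubectl logs legacy-app -c sidecar
```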

16. Viewing Pod CPU usage
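`kubectl top` reads from the Metrics API, so metrics-server (or an equivalent) must be running. A sketch that lists Pods matching a hypothetical label, highest CPU first:

```shell
# -A searches all namespaces; the label selector is hypothetical
kubectl top pod -A -l name=cpu-user --sort-by=cpu
```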


17. Cluster troubleshooting